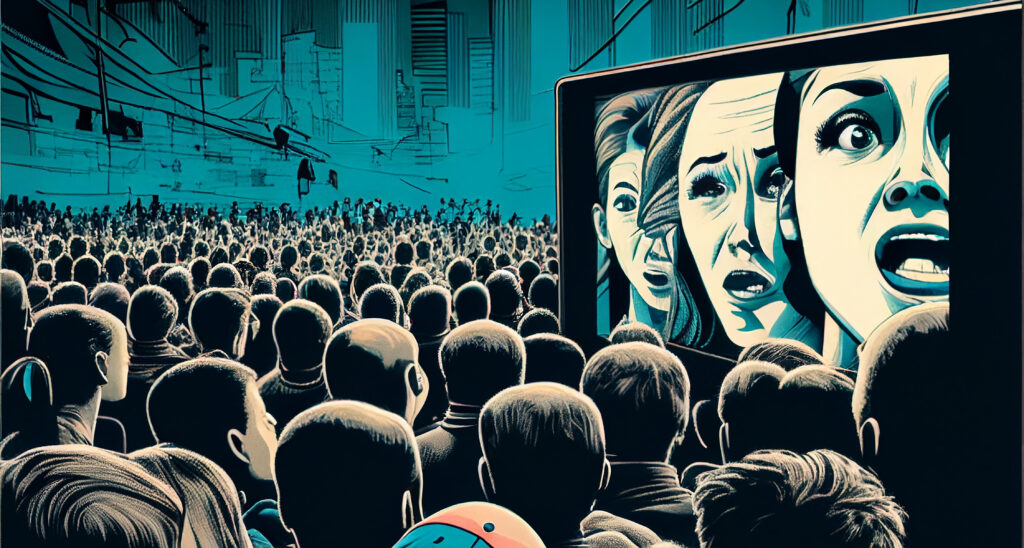

In an instantly connected world, the activities of governments and their intelligence arms, official and unofficial, have drawn growing attention and concern. Recent events like the 2016 US elections, COVID-19, the war in Ukraine, and the Israel-Palestine war have underscored the prevalence of misinformation and disinformation in contemporary news and online discourse, some of it even infiltrating reputable news outlets. (Disinformation is false information created to intentionally and maliciously deceive audiences.) While the concept of fake news may seem like a modern phenomenon, it is far from new.

Throughout history, governments and militaries have used various forms of deception to gain popular support to further their objectives. The term active measures was coined by the Soviet Union in the 1950s to describe a wide range of covert and deniable political influence and subversion operations, including the establishment of front organizations, the backing of friendly political movements, the orchestration of domestic unrest, and the spread of disinformation.

The end of the Cold War and the fall of the Soviet Union brought a substantial reduction in Russian active measures. However, Vladimir Putin’s rule over the last decade has allowed for the revival and reinvention of these techniques. The digital age, with the advent of online news platforms and social media, has amplified the ability of malevolent actors to influence the public.

In the past, articles designed to spread disinformation may have been placed in an obscure foreign newspaper and gradually proliferated to audiences around the world. In the age of information, disinformation is instant and sharable across much wider swaths of the population. Emerging technologies like artificial intelligence, along with stolen or purchased personal data, make the process of creating content tailored to specific audiences even faster and the content itself more convincing.

By 2019, Oxford University’s Computational Propaganda Research Project identified organized “social media manipulation campaigns” being operated in 70 different countries, many working to suppress human rights, discredit political opposition, or drown out political dissent. By 2021, that figure had grown to 81, with 30 of those countries confirmed to be leveraging user data to target specific audiences, and 59 confirmed to be using state-sponsored “trolls” to attack political opponents or activists.

That means nearly 42% of the world’s countries have been directly linked to using the digital theater to advance strategic disinformation.

While the problem is clearly global, China has applied active measures to further its agenda at an unprecedented scale. China’s United Front Work Department (UFWD), an often discreet and multifaceted organ of the Chinese Communist Party (CCP), plays a pivotal role in shaping both domestic and international narratives, influencing individuals and organizations. Its actions span the spectrum from subtle diplomacy to covert operations, all with the overarching goal of safeguarding and expanding the party’s interests.

“The CCP uses what it calls the work of a ‘United Front’ of organizations and constituencies to co-opt and neutralize sources of potential opposition to its policies and authority,” a U.S. State Department report on China’s Coercive Tactics Abroad states. “The CCP’s United Front Work Department (UFWD) is responsible for coordinating domestic and foreign influence operations, through propaganda and manipulation of susceptible audiences and individuals.”

Fake news has created distrust in media, prompted calls to action or inaction in highly contentious political debates, and even affected elections, but it is not the most insidious aspect of the “active measures” that governments use to influence the public.

Behavioral science methods, like those used in advertising campaigns, are being applied to subtly nudge beliefs and actions in order to influence, disrupt, or hijack the thought processes of audiences and achieve specific goals.

For example, a social media page may gradually change its subject matter from adorable cat memes to pro-authoritarian content, or a TikTok star may shift from current events to discussing the rights and policies of a particular group. According to the aforementioned Oxford University analysis from 2021, of the 81 countries identified as operating digital disinformation campaigns, at least 48 included state actors working directly with private “strategic communications” firms – in other words, advertising agencies – to this end.

This method of content delivery is intended to seem organic in both creation and delivery, leveraging distrust in traditional media institutions to shape narratives in a way that seems free of governmental or corporate influence, despite being developed or funded directly by state actors.

The effects of such campaigns could be far-reaching: They could influence militaries’ potential to recruit and retain members; undermine popular support for critical military actions; and even create insider threats in organizations.

As warfighters and the populations that support them advance into an era where tactics increasingly rely on influencing the mind, mental strength may prove to be the most effective weapon.
